YouTuber James Bruton wanted to create a prosthetic arm with natural control. At first, the idea was to use a brain-computer interface, but obtaining reliable data this way proved more difficult than expected.

So James pivoted to machine learning, training the arm to mimic his real arm based on the position and posture of other parts of his body, and using a bunch of Teensy boards while he was at it!

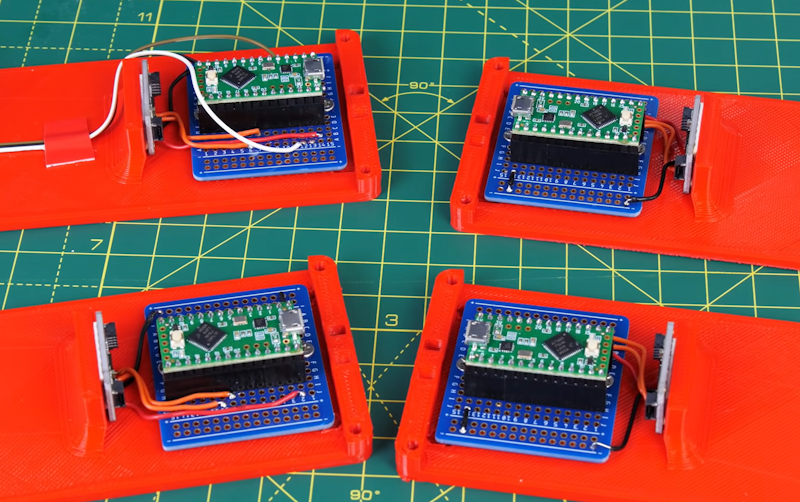

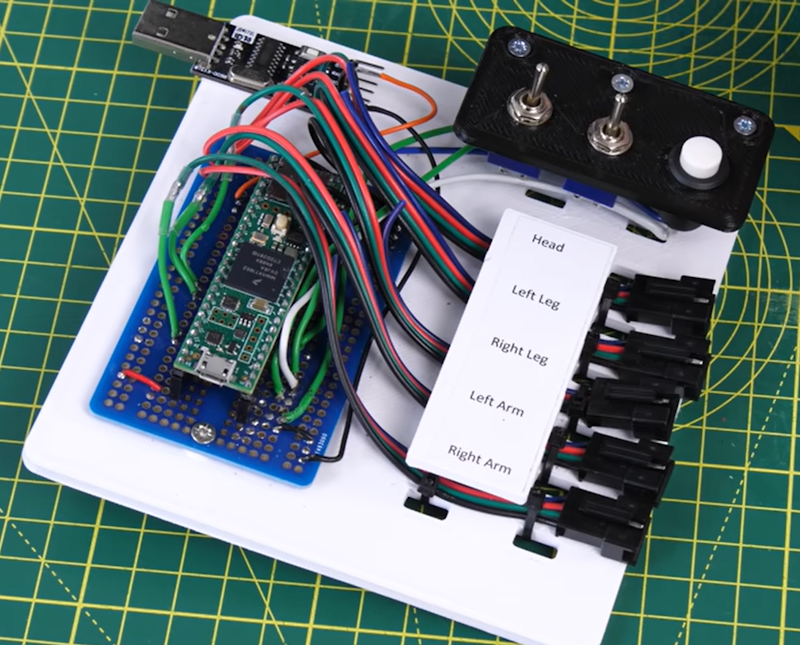

The motion capture solution is based on five Teensy LC boards with TDK MPU-6050 six-axis gyro/accelerometer sensors for motion; the four “limb” units also carry AMS AS5047 magnetic rotary position sensors to measure joint position. A central Teensy 4.1 connected to each of them orchestrates data collection. Dynamixel servo actuators power the arm and are controlled through an Arduino MKR-style shield connected to the 4.1. After training the system using all of his real limbs, James swapped in the 3D-printed prosthetic for his right arm, and it continued to mimic the poses used in training, as you can see in the video below!
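To give a feel for the "train on real limbs, then mimic" idea, here is a minimal Python sketch. The write-up above doesn't say which model James used, so this swaps in a simple k-nearest-neighbour lookup as a stand-in: each training frame pairs readings from the body-worn sensors with the arm joint angles recorded at the same moment, and at run time the closest stored frames are averaged to choose an arm pose. The function name, frame layout, and all numbers are hypothetical and only for illustration.

```python
import math


def nearest_pose(training, body_frame, k=3):
    """Average the arm poses of the k training frames closest to body_frame.

    training: list of (body_readings, arm_joint_angles) pairs (hypothetical layout).
    """
    # Rank stored frames by Euclidean distance between body readings.
    ranked = sorted(training, key=lambda pair: math.dist(pair[0], body_frame))
    neighbours = [arm for _, arm in ranked[:k]]
    # Average each joint angle across the selected neighbours.
    return [sum(joint) / len(neighbours) for joint in zip(*neighbours)]


# Made-up training data: (body sensor readings, arm joint angles in degrees).
training = [
    ([0.0, 0.0], [10.0, 20.0]),
    ([1.0, 0.0], [30.0, 40.0]),
    ([0.0, 1.0], [50.0, 60.0]),
    ([5.0, 5.0], [90.0, 90.0]),
]

# A new body reading, captured with the prosthetic in place of the real arm.
pose = nearest_pose(training, [0.1, 0.1], k=1)
print(pose)
```

In a real build the inputs would be the gyro/accelerometer and rotary-encoder values gathered by the central board, and the output would be sent on as servo targets; a trained neural network could replace the lookup without changing that overall shape.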